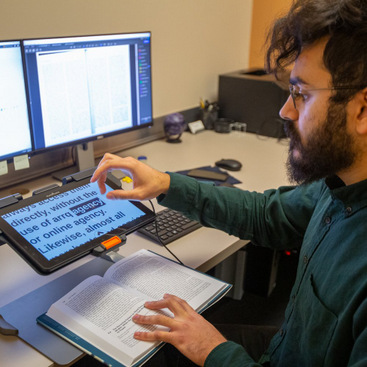

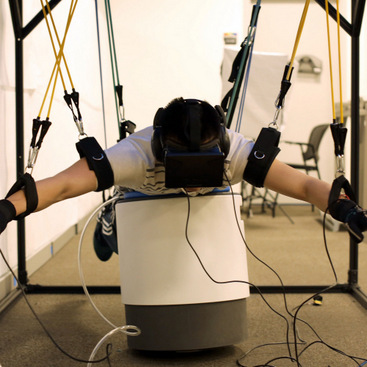

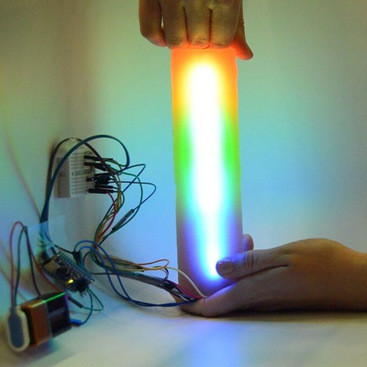

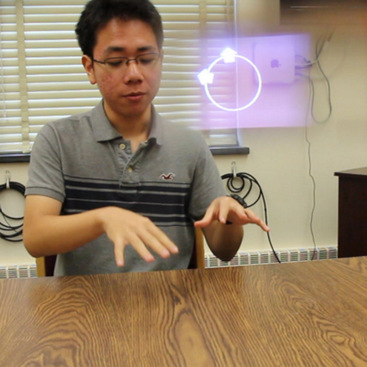

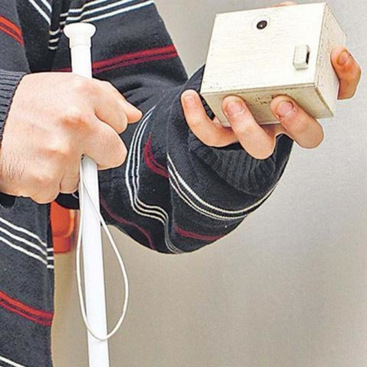

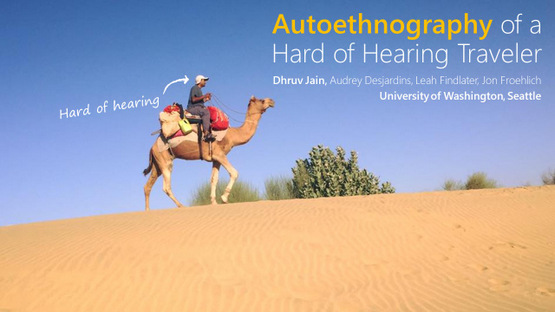

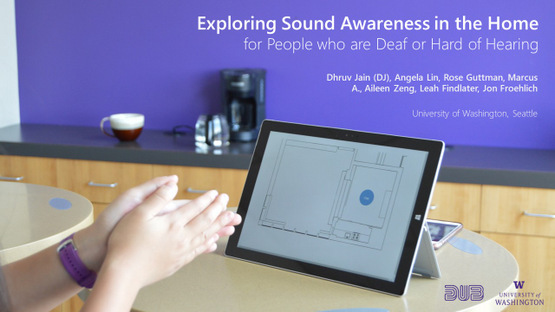

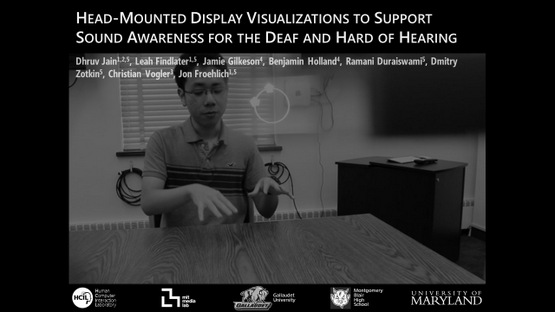

This upper-level undergraduate class serves as an introduction to accessibility for undergraduate studdents and uses a curriculum designed by Professor Dhruv Jain. Students learn essential concepts related to accessibiity, disability theory, and user-centric design, and contribute to a studio-style team project in collaboration with clients with a disability and relevant stakeholders we recruit. This intense 14-week class requires working in teams to lead a full scale end-to-end accessibility project from its conceptualization, to design, to implementation, and evaluation. The goal is to reach a level of proficiency comparable to that of a well-launched employee team in a computing industry. Often, projects terminate in real-world deployments and app releases.

Read more →