About us

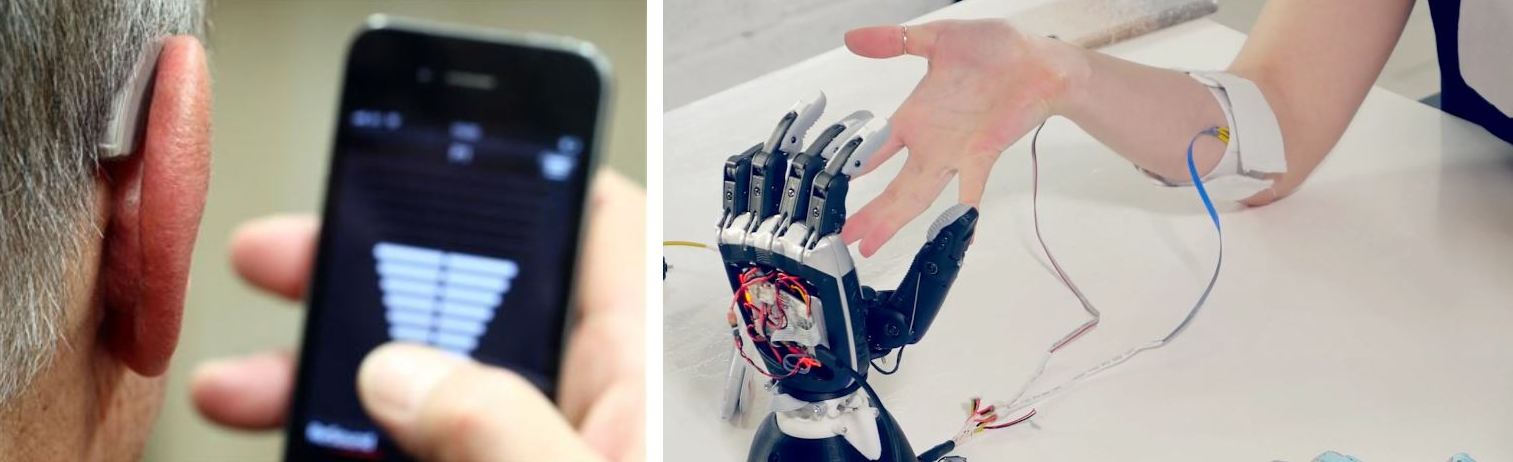

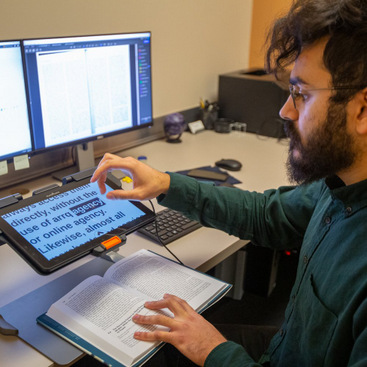

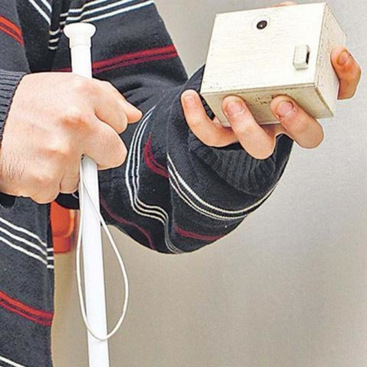

We are a research lab within the University of Michigan's Computer Science and Engineering department. Our mission is to deliver rich, meaningful, and interactive sonic experiences for everyone through research at the forefront of human-computer interaction, accessible computing, and sound UX. We focus on the interplay between sounds (including speech and music) and sensory abilities (such as those of deaf, blind, ADHD, autistic, and non-disabled people). Our inventions include both delivering sound information accessibly and using sound to make the world more accessible and perceivable (e.g., audio-based navigation systems for blind people).

We embrace the term ‘accessibility’ in its broadest sense, encompassing not only tailored experiences for people with disabilities but also the seamless and effortless delivery of information to all users. We focus on accessibility because we view it as a window into the future: people with disabilities have historically been early adopters of many modern technologies, such as telephones, headphones, email, messaging, and smart speakers.

Our team consists of people from diverse backgrounds, including designers, engineers, musicians, psychologists, physicians, and sociologists, allowing us to examine sound accessibility challenges from a multi-stakeholder perspective. Our research process is iterative, moving from design to building to evaluation and deployment, and has often resulted in tangible products with substantial real-world impact (e.g., one deployed app has over 100,000 users). Our work has also directly influenced products at leading tech companies such as Microsoft, Google, and Apple, has received paper awards at premier HCI conferences, and has been featured in leading press venues (e.g., CNN, Forbes, New Scientist).

Currently, with generous support from the National Institutes of Health (NIH), Google, and Michigan Medicine, we are focusing on the following research areas:

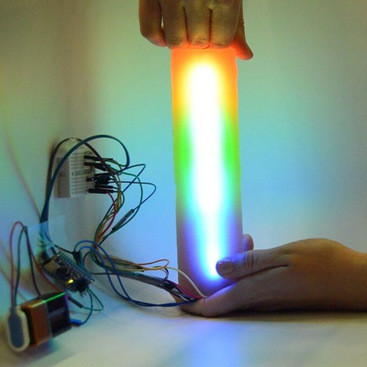

Interactive AI for Sound Accessibility. How can Deaf people, who cannot hear sounds, record sounds to teach and train their own AI sound recognition models? What sound cues will help provide holistic sound awareness to deaf and hard of hearing people and how can AI help? How can sound recognition technology adapt to changing contexts and environments?

Projects:

HomeSound

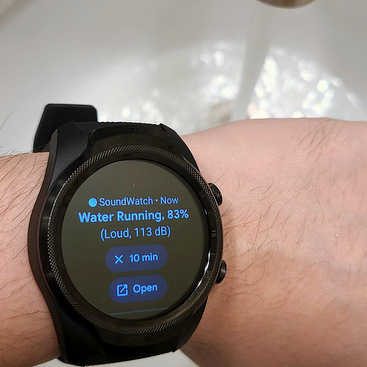

| SoundWatch

| AdaptiveSound

| ProtoSound

| HACS

| SoundWeaver

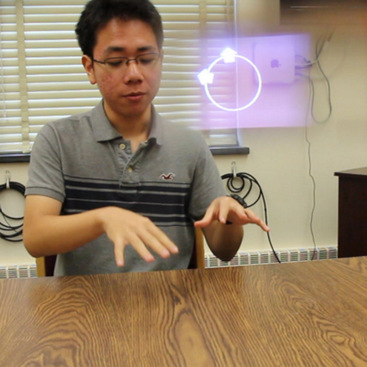

Personalizable Soundscapes and Hearables. How can multiple intrusive environmental sound cues be delivered seamlessly? How can we dynamically adapt music based on the user's environment and context of use? How can sound UI be designed to help autistic people manage hypersensitivity?

Projects:

MaskSound | SonicMold

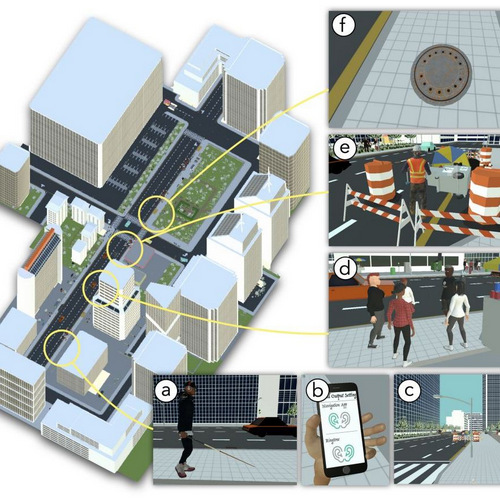

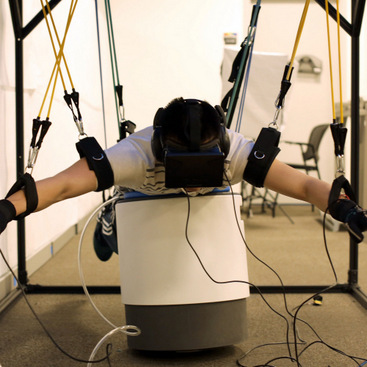

AR/VR Sound Experiences and Toolkits. What toolkits and guidelines can help developers integrate accessibility into their software and apps? What rich sound experiences can AR technology enable, and how can we design them? How can we seamlessly blend sound from the real and virtual worlds?

Projects:

SoundVR | SoundModVR | SoundShift

Medical Communication Accessibility. How can speech technology improve communication for Deaf/disabled people in healthcare settings? What interfaces and form factors are most suitable for deploying technology in high-stakes settings? How do different stakeholders, including doctors, patients, and medical staff, react to these technologies?

Projects:

MedCaption | HoloSound | CartGPT | SoundActions

We're always looking for students, postdocs, and collaborators. If you are interested in working in these areas with us, please apply.

Recent News

Jul 02: Two papers, SoundModVR and MaskSound, accepted to ASSETS 2024!

Jun 03: Alexander Wang joined our lab. Welcome, Alex!

May 22: Our paper SoundShift, which conceptualizes mixed reality audio manipulations, accepted to DIS 2024! Congrats, Rue-Chei and team!

Mar 11: Our undergraduate student, Hriday Chhabria, accepted to the CMU REU program! Hope you have a great time this summer, Hriday.

Feb 21: Our undergraduate student, Wren Wood, accepted to the PhD program at Clemson University! Congrats, Wren!

Jan 23: Our Master's student, Jeremy Huang, has been accepted to the UMich CSE PhD program. That's two pieces of good news for Jeremy this month (the CHI paper being the first). Congrats, Jeremy!

Jan 19: Our paper detailing our brand-new human-AI collaborative approach for sound recognition has been accepted to CHI 2024! We can't wait to present our work in Hawaii later this year!

Nov 10: Professor Dhruv Jain invited to give a talk on accessibility research in the Introduction to HCI class at the University of Michigan.

Oct 24: SoundWatch received a Best Student Paper nomination at ASSETS 2023! Congrats, Jeremy and team!

Aug 28: A new PhD student, Xinyun Cao, joined our lab. Welcome, Xinyun!

Aug 17: New funding alert! Our NIH funding proposal on "Developing Patient Education Materials to Address the Needs of Patients with Sensory Disabilities" has been accepted!

Aug 10: Professor Dhruv Jain invited to give a talk on Sound Accessibility at Google.

Jun 30: Two papers from our lab, SoundWatch field study and AdaptiveSound, accepted to ASSETS 2023!

Apr 19: Professor Dhruv Jain awarded the Google Research Scholar Award.

Mar 16: Professor Dhruv Jain elected as the inaugural ACM SIGCHI VP for Accessibility!

Feb 14: Professor Dhruv Jain honored with the SIGCHI Outstanding Dissertation Award.

Jan 30: Professor Dhruv Jain honored with the William Chan Memorial Dissertation Award.